FAST Ultrasound Trauma Detection on Edge Device

AI Assisted Evaluation of Focused Assessment with Sonography in Trauma

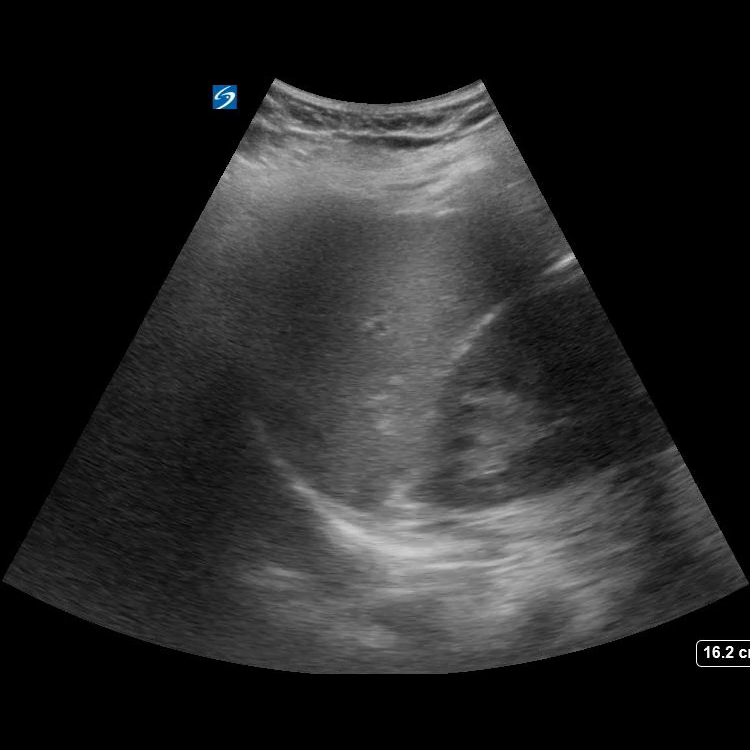

CAAI developed an AI model that detects ultrasound image adequacy and positivity in the FAST exam (Focused Assessment with Sonography in Trauma [1]). The results have been accepted for publication in the Journal of Trauma and Acute Care Surgery.

FAST is an ultrasound protocol used by over 96% of level 1 trauma centers to identify the location of life-threatening hemorrhage in hemodynamically unstable trauma patients. This project provides an AI model that assists in interpreting the abdominal views of the FAST examination, both in judging whether a view is adequate and in determining whether the fluid survey is positive. FAST images were acquired from the UK Chandler Medical Center, a quaternary care level 1 trauma center with more than 3,500 adult trauma evaluations. The model detects positivity and adequacy of FAST examinations with 94% and 97% accuracy, respectively, helping to standardize care delivery with minimal expert clinician input. This work shows that AI is a feasible way to improve the accuracy of imaging interpretation in patient care and demonstrates its value as a point-of-care clinical decision-making tool.

We deployed the model (based on DenseNet-121 [2]) on an edge device (NVIDIA Jetson TX2 [3]) and, using TensorRT [4] performance optimizations, achieved faster-than-real-time performance: 19 fps on a video, versus the 15 fps expected from an ultrasound device. The model is trained to recognize adequate views of the LUQ/RUQ (left/right upper quadrant) and positive views, i.e., views showing free fluid. The video below demonstrates the model's prediction of view adequacy.
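The real-time claim comes down to simple arithmetic: at the 15 fps an ultrasound device is expected to produce, a new frame arrives about every 66.7 ms, while a throughput of 19 fps corresponds to roughly 52.6 ms of processing per frame. The sketch below computes that budget and checks whether the model keeps up. The function names are hypothetical; only the 19 fps and 15 fps figures come from the text above.

```python
def frame_budget_ms(fps: float) -> float:
    """Average time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def realtime_headroom(model_fps: float, source_fps: float) -> tuple[float, float, bool]:
    """Compare model throughput with the rate at which frames arrive.

    Returns (ms between incoming frames, average ms the model spends per frame,
    whether the model keeps up with the source).
    """
    budget = frame_budget_ms(source_fps)    # interval between incoming frames
    per_frame = frame_budget_ms(model_fps)  # average processing time per frame
    return budget, per_frame, per_frame <= budget


if __name__ == "__main__":
    budget, per_frame, ok = realtime_headroom(model_fps=19, source_fps=15)
    print(f"budget {budget:.1f} ms, model {per_frame:.1f} ms, real-time: {ok}")
```

This treats fps as average throughput. Per-frame latency on the device can differ, for example if frames are batched or pipelined, so the sketch only checks that the model does not fall behind the incoming stream.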

The device can be used as a training tool for inexperienced ultrasound operators, helping them obtain better (adequate) views and estimating the probability of a positive FAST exam.
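One way a tool like this could turn model outputs into operator feedback is to convert the two raw scores (adequacy and positivity) into probabilities and average them over recent frames so the display does not flicker. The sketch below assumes a model that emits one logit per task per frame. The class name, the 15-frame window (about one second at 15 fps), the 0.5 threshold, and the choice to report positivity only once the view is adequate are all illustrative assumptions, not settings of the deployed system.

```python
import math
from collections import deque


def sigmoid(x: float) -> float:
    """Numerically stable map from a raw score (logit) to a probability in [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # avoids overflow for large negative x
    return z / (1.0 + z)


class FrameFeedback:
    """Hypothetical helper: moving average of per-frame probabilities."""

    def __init__(self, window: int = 15, threshold: float = 0.5):
        self.threshold = threshold
        self.adequacy = deque(maxlen=window)    # recent P(adequate view)
        self.positivity = deque(maxlen=window)  # recent P(positive view)

    def update(self, adequacy_logit: float, positivity_logit: float) -> dict:
        self.adequacy.append(sigmoid(adequacy_logit))
        self.positivity.append(sigmoid(positivity_logit))
        p_adequate = sum(self.adequacy) / len(self.adequacy)
        p_positive = sum(self.positivity) / len(self.positivity)
        adequate = p_adequate >= self.threshold
        return {
            "adequate": adequate,
            "p_adequate": p_adequate,
            # Assumed design choice: suggest positivity only on adequate views.
            "p_positive": p_positive if adequate else None,
        }
```

Smoothing trades a little responsiveness for stability: a short window reacts quickly as the operator moves the probe, while a longer one avoids flipping the "adequate" indicator on single noisy frames.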

This project was carried out in collaboration with the University of Kentucky Department of Surgery. The annotated data were provided by Brittany E. Levy and Jennifer T. Castle.

[1] https://www.ncbi.nlm.nih.gov/books/NBK470479/

[2] Huang G, Liu Z, van der Maaten L, Weinberger KQ. Densely Connected Convolutional Networks. Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017). 2017:2261-2269. DOI: 10.48550/arxiv.1608.06993

[3] https://developer.nvidia.com/embedded/jetson-tx2

[4] https://developer.nvidia.com/tensorrt