LLM Factory

A Specialized, Customizable, Secure Self-Service Tool

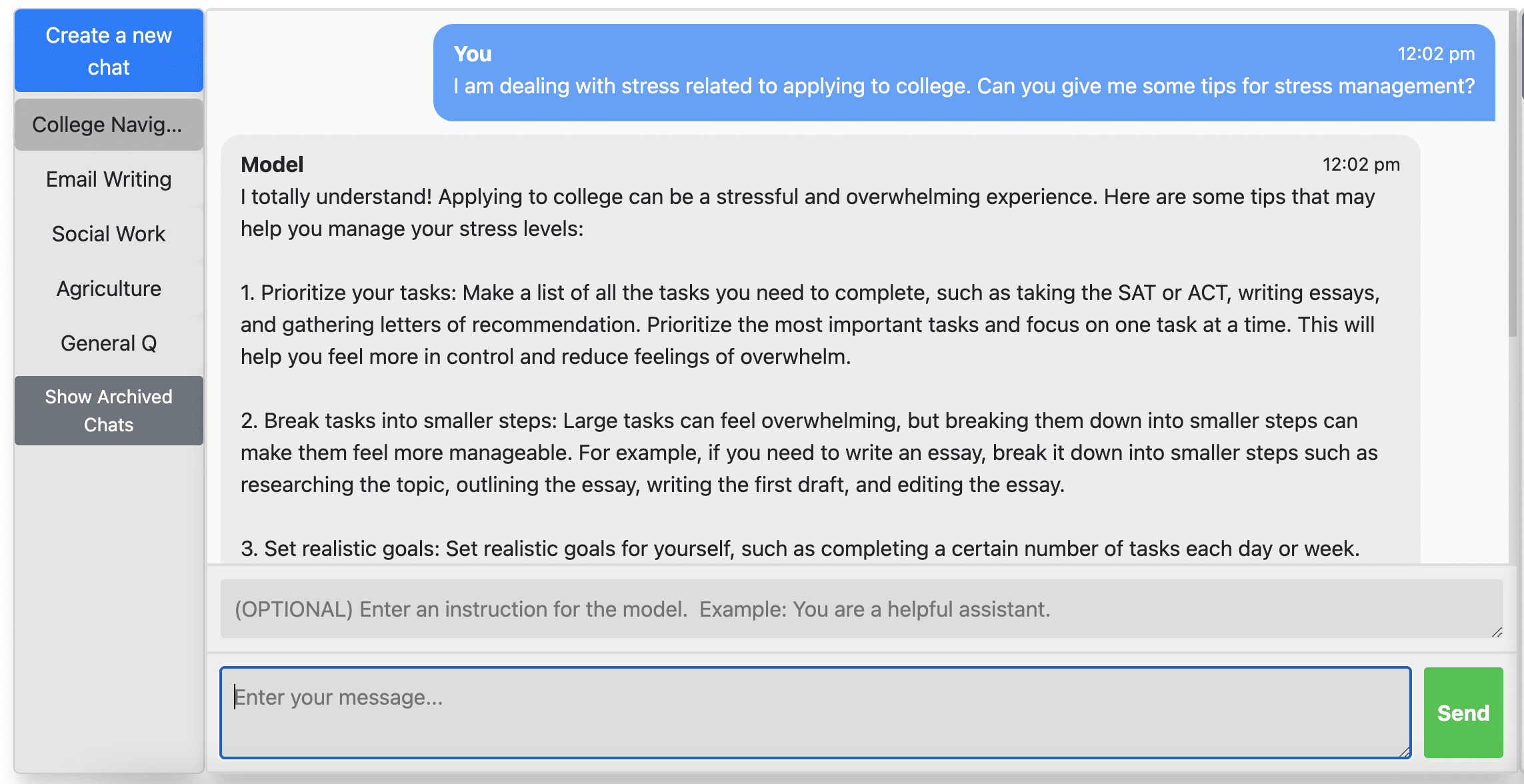

The LLM Factory, developed by the University of Kentucky’s Center for Applied AI, allows users to securely fine-tune their own Large Language Models (LLMs) and query the model on a platform controlled by the University of Kentucky. LLM Factory offers users control, flexibility, and security.

Fine-tuning a model with LLM Factory lets users develop specialized LLMs tailored to specific projects. With this platform, users can upload their own datasets, train models by layering data on a base model, privately configure and explore models, and expose models to external applications through an OpenAI-compatible API. For those concerned about the security of private data, LLM Factory is an alternative to public models that keeps your data secure.

Use the API feature to embed a model (either your fine-tuned model or the base model) into your projects. LLM Factory’s base model is currently Meta Llama 3 8B (as of April 2024).

Interact with Llama 3, our current base/foundational model, here: https://hub.ai.uky.edu/llm-chat/

Citation

A paper detailing the development and usage of this tool can be found here:

arXiv:2402.00913

More Information

Additional information, including a technical overview and user guide, can be found here: https://hub.ai.uky.edu/llm-factory/

What supports LLM Factory?

The Center for Applied AI functions within the Institute for Biomedical Informatics. Efforts are supported by the University of Kentucky College of Medicine.

Why use LLM Factory?

Customization and Flexibility

LLM Factory allows users to fine-tune their own LLM and develop specialized models tailored to a project’s specific needs. Models are built on the latest foundational pre-trained models, so users benefit from current AI advancements without training from scratch.

Security and Privacy

Unlike on public platforms, your information and interactions are private on LLM Factory. Datasets uploaded to LLM Factory for adapter training are stored locally on a University of Kentucky (UK) server and are accessible only to you and your team. Fine-tuned models are private and accessible only through a unique configuration ID and a personal API key. All API requests and chat conversations within LLM Factory’s interface are secure.

OpenAI Compatible API

LLM Factory’s API is OpenAI compatible, so users can call their models from external applications and integrate them with the existing library of OpenAI-compatible tools. This means a range of advanced features, including embeddings, transcriptions, function calling, and more, can be easily supported.
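As a rough illustration of what "OpenAI compatible" means in practice, the sketch below builds a chat completion request in the shape the OpenAI API uses (a POST to a `/chat/completions` path with a bearer token and a JSON body of `model` and `messages`). The base URL, model identifier, and key shown here are placeholders, not real LLM Factory values, and passing the configuration ID in the `model` field is an assumption; consult the user guide for the actual endpoint and parameters.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values issued for your project.
BASE_URL = "https://example.invalid/v1"  # assumption, not a real LLM Factory endpoint
MODEL_ID = "my-config-id"                # assumption: configuration ID sent as "model"
API_KEY = "sk-placeholder"               # placeholder for a personal API key


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_ID, api_key=API_KEY):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize the attached interview transcript.")
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Because the request shape follows the OpenAI convention, existing client libraries that accept a custom base URL should, in principle, work the same way once pointed at the real endpoint.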

Free Access

Mainstream tools like GPT-4 require subscriptions; LLM Factory is free to CAAI collaborators. CAAI can host models at a significantly lower cost because we use on-premises computational resources for inference. To use LLM Factory, simply reach out with a project idea to be granted access. Contact us via email or fill out our collaboration form if you’re interested.

Collaborative Projects using LLM Factory

The following tools are in development:

SpeakEZ – transcription, diarization, summarization, theme extraction

KyStats – code generation and querying databases with natural language

AgriGuide – RAG methods and LangChain tools for community- and agriculture-specific resources, multi-modal chat and image interface

Population Health – distance learning assistant using conversational LLMs and generating synthetic actors

CELT – RAG methods and LangChain tools integrated into a website to help users navigate and find resources