Time Series Classification with Transformers

Recent studies show that transformer models have contributed to many tasks, including classification, forecasting, and segmentation. This article builds on the definitions in the paper ‘Attention Is All You Need’. Since that paper was published, transformer models have gained significant popularity, and their use in scientific studies has grown rapidly.

In this article, we will explore how to modify a basic transformer model for time series classification tasks and understand the underlying logic of the self-attention mechanism.
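As a reference point for the self-attention mechanism, here is a minimal single-head scaled dot-product self-attention in NumPy, following the formula softmax(QKᵀ/√d_k)V from ‘Attention Is All You Need’. The random projection matrices and the sizes are illustrative assumptions, not values taken from the model in this article.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x:   (seq_len, d_model) input sequence
    w_*: (d_model, d_k) projection matrices (random, hypothetical weights here)
    Returns the attended output (seq_len, d_k) and the attention
    weights (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    # Similarity of every position with every other position, scaled by sqrt(d_k).
    scores = q @ k.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 10, 16, 8  # illustrative sizes
x = rng.standard_normal((seq_len, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (10, 8) (10, 10)
```

Each row of `attn` tells you how much one time step draws on every other time step when building its new representation.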

First, let’s look at the dataset we will use to train our model. It is a publicly available dataset based on data from physionet.org, and you can easily download the files ‘mitbih_test.csv’ and ‘mitbih_train.csv’ via the link below.

https://www.kaggle.com/datasets/shayanfazeli/heartbeat?select=mitbih_train.csv

The training set contains more than 87,000 examples, one per row. Each row has 188 columns: the first 187 hold the signal samples and the last holds the class label.
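A minimal sketch of splitting each row into a signal and a label with NumPy. It assumes the CSV files have no header row and follow the 187-samples-plus-label layout described above; the function name `load_mitbih` is hypothetical and not part of the linked repository.

```python
import io
import numpy as np

def load_mitbih(source):
    """Split an MIT-BIH CSV into signals and labels (hypothetical helper).

    Assumes no header row: 187 signal columns followed by one label column.
    `source` can be a file path or any file-like object.
    """
    data = np.loadtxt(source, delimiter=",", ndmin=2)
    signals = data[:, :-1].astype(np.float32)  # (n_examples, 187)
    labels = data[:, -1].astype(np.int64)      # label is stored as a float in the file
    return signals, labels

# With the downloaded file (path is illustrative):
# X_train, y_train = load_mitbih("mitbih_train.csv")

# Self-contained check on two synthetic rows with the same layout.
rows = "\n".join(",".join(["0.5"] * 187 + [str(label)]) for label in (0.0, 3.0))
X, y = load_mitbih(io.StringIO(rows))
print(X.shape, y)  # (2, 187) [0 3]
```

For the full training file, `pandas.read_csv(path, header=None)` would be a faster alternative to `np.loadtxt`.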

Now let’s look at the modifications I made to convert this transformer from a language-translation model into a signal classifier. You can find the original translation model via the link below.

https://github.com/hyunwoongko/transformer

You can compare it with my modified version via the link below, which points to my GitHub profile. I kept the file structure identical so that the differences between the two models are easy to see.

https://github.com/mselmangokmen/TimeSeriesProject

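- The first modification is removing the masking. In the original translation model, masking keeps each position from attending to positions that come after it while an output sequence is generated. Our goal is not to transform the time series data or generate new sequences; the classifier sees the whole signal at once, so there is no need for this masking.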

- Another modification is removing the decoder component of the transformer. For our classification task, the encoder’s output is sufficient. Since our model is not generative, we don’t need a decoder to produce new sequences. Instead, we only need a vector whose length equals the number of classes in our dataset (N × 1, where N is the number of classes) to perform the classification. A classification head at the end of the encoder produces this vector from the encoder’s output, and it is what makes the model suited to this task. See below for the implementation of the classification head.
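To make the first modification concrete, here is a small NumPy sketch contrasting attention weights with a look-ahead (causal) mask, as a generative decoder would use, against the unmasked attention a classifier can use. The function name and the random inputs are illustrative assumptions, not code from either repository.

```python
import numpy as np

def attention_weights(q, k, causal=False):
    """Softmax-normalized attention weights for one head (hypothetical helper).

    With causal=True, position i may only attend to positions <= i (the
    look-ahead mask a generative decoder uses). With causal=False, every
    position attends to the whole sequence.
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if causal:
        n = scores.shape[0]
        future = np.triu(np.ones((n, n), dtype=bool), k=1)  # strictly above the diagonal
        scores = np.where(future, -np.inf, scores)          # exp(-inf) becomes 0 below
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    return w / w.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
q = k = rng.standard_normal((5, 4))
masked = attention_weights(q, k, causal=True)
unmasked = attention_weights(q, k, causal=False)
print(np.allclose(np.triu(masked, k=1), 0))  # True: no attention to later steps
print(np.all(unmasked > 0))                  # True: every step sees every other step
```

For classifying a complete heartbeat, the unmasked version lets early samples use context from later ones, which is the behavior the first modification keeps.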

The new transformer model no longer includes a decoder; it has been replaced with a classification head.

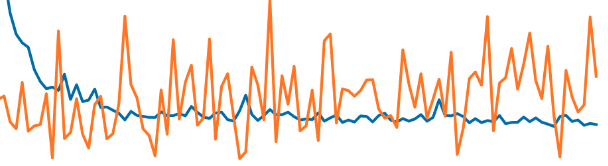
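The exact head used in this project is the one in the figure and the linked repository. As a hypothetical NumPy sketch of the general idea, an encoder output can be pooled over time and passed through a linear layer to get one score per class. Mean pooling, the random weights, and the choice of five classes are assumptions made for illustration; flattening the sequence before the linear layer is another common option.

```python
import numpy as np

def classification_head(encoded, w, b):
    """Map encoder output to class probabilities (hypothetical sketch).

    encoded: (batch, seq_len, d_model) output of the encoder stack
    w:       (d_model, n_classes) weights of a linear layer
    b:       (n_classes,) bias
    """
    pooled = encoded.mean(axis=1)  # average over time steps -> (batch, d_model)
    logits = pooled @ w + b        # (batch, n_classes)
    # Softmax over classes gives a probability vector per example.
    logits = logits - logits.max(axis=-1, keepdims=True)
    probs = np.exp(logits)
    probs /= probs.sum(axis=-1, keepdims=True)
    return probs

rng = np.random.default_rng(0)
batch, seq_len, d_model, n_classes = 4, 187, 16, 5  # assumed sizes
encoded = rng.standard_normal((batch, seq_len, d_model))
w = rng.standard_normal((d_model, n_classes))
b = np.zeros(n_classes)
probs = classification_head(encoded, w, b)
predicted = probs.argmax(axis=-1)  # one class index per heartbeat
print(probs.shape, predicted.shape)  # (4, 5) (4,)
```

If the model is trained in PyTorch with `nn.CrossEntropyLoss`, the head would return the raw logits instead, since that loss applies log-softmax internally.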

The training and test results are very strong compared with approaches that do not use transformer models.